Tag: emc

-

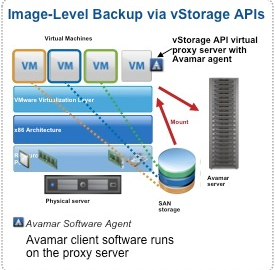

Avamar 6.1 Unified Proxy Appliance for VMware

I’m going back over some of the new features in Avamar 6.1 and am very impressed with the enhancements Avamar now offers for VMware image-based backups. Before version 6.1 you needed a separate image proxy for Linux and a separate proxy for Windows; now, with the new proxy design, both operating systems have…

-

EMC ICSEA Isilon Certified

Posting the next certification, as I’ve now completed 2 of the 3 I set as my goal for the month. There didn’t seem to be much difference in the training between the ICSEA and the ICSA, but I’m going to assume the technical certifications will be much different. I’m not one to hang up physical…

-

Warning 6698 VSS exception code 0x800706be thrown freezing volumes – The remote procedure call failed

When you try to create shadow copies on large volumes that have a small cluster size (less than 4 kilobytes), or if you take snapshots of several very large volumes at the same time, the VSS software provider may use a larger paged pool memory allocation during the shadow copy creation than is required. If…
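The two triggers described above (large volumes with clusters under 4 KB, and several very large volumes snapshotted at once) can be turned into a quick pre-check before a backup window. This is a minimal sketch, not a Microsoft tool: the `Volume` record, the 1 TiB cut-off for "very large" and the limit of one very large volume per snapshot set are hypothetical values picked for illustration; only the 4 KB cluster-size boundary comes from the description.

```python
from dataclasses import dataclass

# From the description: "small" means a cluster size under 4 KB.
SMALL_CLUSTER_BYTES = 4 * 1024
# HYPOTHETICAL cut-off for a "very large" volume; tune for your environment.
LARGE_VOLUME_BYTES = 1024 ** 4  # 1 TiB


@dataclass
class Volume:
    name: str           # e.g. "D:"
    size_bytes: int     # total volume size
    cluster_bytes: int  # NTFS allocation unit size


def vss_risk_report(volumes, max_large_together=1):
    """List the paged-pool risk factors found in one shadow copy set.

    max_large_together is a HYPOTHETICAL limit on how many very large
    volumes may be snapshotted at the same time.
    """
    reasons = []
    large = [v for v in volumes if v.size_bytes >= LARGE_VOLUME_BYTES]
    # Trigger 1: a very large volume formatted with small clusters.
    for v in large:
        if v.cluster_bytes < SMALL_CLUSTER_BYTES:
            reasons.append(f"{v.name} is very large and uses {v.cluster_bytes}-byte clusters")
    # Trigger 2: several very large volumes in the same snapshot set.
    if len(large) > max_large_together:
        names = ", ".join(v.name for v in large)
        reasons.append(f"several very large volumes snapshotted together: {names}")
    return reasons


# Usage: D: has small clusters, and D: plus E: are both very large.
report = vss_risk_report([
    Volume("C:", 100 * 1024 ** 3, 512),
    Volume("D:", 2 * 1024 ** 4, 512),
    Volume("E:", 3 * 1024 ** 4, 4096),
])
for line in report:
    print(line)
```

An empty report only means neither pattern was found; it does not rule out other causes of the 0x800706be error.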

-

EMC VNXe Data Protection with Symantec Backup Exec

EMC recently launched its new VNX storage line, a unified storage platform with one management framework supporting file, block, and object storage, optimized for virtual applications. From that line EMC also produced a more affordable “VNXe” model for small businesses and remote offices. There are a number of ways to protect the VNXe including…

-

Unable to Connect to the OpenStorage Device

Error: Unable to connect to the OpenStorage device. Ensure that the network is properly configured between the device and the media server.

Environment: Backup server: Symantec Backup Exec 2010 R3. OpenStorage device: DataDomain DD670 running 5.0.0.7-226726.

Today I was configuring DDBOOST for Backup Exec 2010 R3 using a replicated pair of DD670s. The DD670s were…
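Since the error points at the network path between the media server and the device, a first step is to confirm that the media server can open TCP connections to the Data Domain at all. A minimal sketch using only the Python standard library; the function names are hypothetical helpers, and the host name and port list you pass in are assumptions you supply, not values taken from Backup Exec.

```python
import socket


def tcp_reachable(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port opens within `timeout` seconds."""
    try:
        # create_connection resolves the name and tries each address in turn.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name-resolution failures.
        return False


def check_ports(host, ports, timeout=3.0):
    """Map each port to whether it is reachable from this machine."""
    return {port: tcp_reachable(host, port, timeout) for port in ports}
```

Run it from the Backup Exec media server against each DD670, for example `check_ports("dd670-a.example.local", [2049, 111])` with your own (here hypothetical) host name. TCP 2049 and 111 are the ports commonly listed for DD Boost, but confirm the list for your DD OS release; a `True` result only proves the TCP path is open, not that DD Boost itself is configured correctly.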

-

Troubleshooting: A checkpoint validation (hfscheck) of server checkpoint data is overdue.

How to troubleshoot EMC Avamar Event ID 114113: “A checkpoint validation (hfscheck) of server checkpoint data is overdue.”
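Going by the event text, the condition amounts to an age check: the newest checkpoint that has passed hfscheck validation is older than the server expects. A minimal sketch of that logic, assuming you have already pulled checkpoint creation times and validation status into Python. The tuple layout and the 36-hour limit are hypothetical, not Avamar's documented rule, and this is not how the Avamar server computes the event internally.

```python
from datetime import datetime, timedelta

# HYPOTHETICAL limit; use the validation schedule your grid actually runs.
MAX_VALIDATION_AGE = timedelta(hours=36)


def hfscheck_overdue(checkpoints, now, max_age=MAX_VALIDATION_AGE):
    """Return True if no validated checkpoint is newer than `now - max_age`.

    `checkpoints` is an iterable of (created_at, validated) tuples, a
    HYPOTHETICAL format standing in for whatever your checkpoint listing shows.
    """
    validated = [created for created, ok in checkpoints if ok]
    if not validated:
        return True  # nothing has passed validation at all
    return now - max(validated) > max_age


now = datetime(2012, 6, 1, 12, 0)
cps = [
    (datetime(2012, 6, 1, 8, 0), False),  # newest checkpoint, not yet validated
    (datetime(2012, 5, 31, 6, 0), True),  # last validated one, 30 hours old
]
print(hfscheck_overdue(cps, now))                                # False (30h <= 36h)
print(hfscheck_overdue(cps, now, max_age=timedelta(hours=24)))   # True (30h > 24h)
```

Note that a recent but unvalidated checkpoint does not clear the condition; only a checkpoint that has actually passed validation counts.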

-

How to check node capacity across an EMC Avamar grid

If you think your EMC Avamar grid is close to capacity, here are a couple of ways to check every node for disk space used and disk space available.
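Once the per-node numbers are collected, they are easier to scan if you flag only the partitions above a threshold. A minimal sketch, assuming you have already captured `df -Pk` output (POSIX format, 1024-byte blocks) from each node as text, by whatever means you normally use on the grid. The node IDs, the `/data` mount prefix for the data partitions and the 80% threshold are assumptions for illustration.

```python
def parse_df_pk(text):
    """Parse `df -Pk` output into (filesystem, mount, used_kb, avail_kb, pct) tuples."""
    rows = []
    for line in text.strip().splitlines()[1:]:  # skip the header line
        fields = line.split()
        if len(fields) < 6:
            continue  # not a data row
        fs, _total_kb, used_kb, avail_kb, capacity = fields[:5]
        mount = " ".join(fields[5:])  # rejoin a mount point that contained spaces
        try:
            rows.append((fs, mount, int(used_kb), int(avail_kb), int(capacity.rstrip("%"))))
        except ValueError:
            continue  # e.g. "-" values reported for pseudo filesystems
    return rows


def nodes_over(node_outputs, threshold_pct, mount_prefix="/data"):
    """Return {node: [(mount, pct), ...]} for data partitions at or above threshold_pct.

    mount_prefix is an ASSUMPTION about how the nodes' data partitions are mounted.
    """
    over = {}
    for node, text in node_outputs.items():
        hits = [(mount, pct) for _fs, mount, _used, _avail, pct in parse_df_pk(text)
                if mount.startswith(mount_prefix) and pct >= threshold_pct]
        if hits:
            over[node] = hits
    return over


# HYPOTHETICAL sample output; "0.0" and "0.1" are illustrative node IDs.
sample = """Filesystem 1024-blocks Used Available Capacity Mounted on
/dev/sda3 10000 2000 8000 20% /
/dev/sdb1 1000000 850000 150000 85% /data01
/dev/sdc1 1000000 400000 600000 40% /data02
"""
print(nodes_over({"0.0": sample, "0.1": sample.replace("85%", "50%")}, 80))
# {'0.0': [('/data01', 85)]}
```

Filtering on the data partitions keeps the operating system volume out of the report, since it fills for different reasons than backup data.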

-

Avamar Agent now standard on Iomega ix-12 array

EMC Avamar agents are usually not found running directly on storage arrays, until now. At EMC World, Iomega gave a sneak preview of its ix12 array with a built-in Avamar agent. The agent will allow SMB remote offices to use Avamar’s deduplication features without requiring additional hardware.