Category: Technology

-

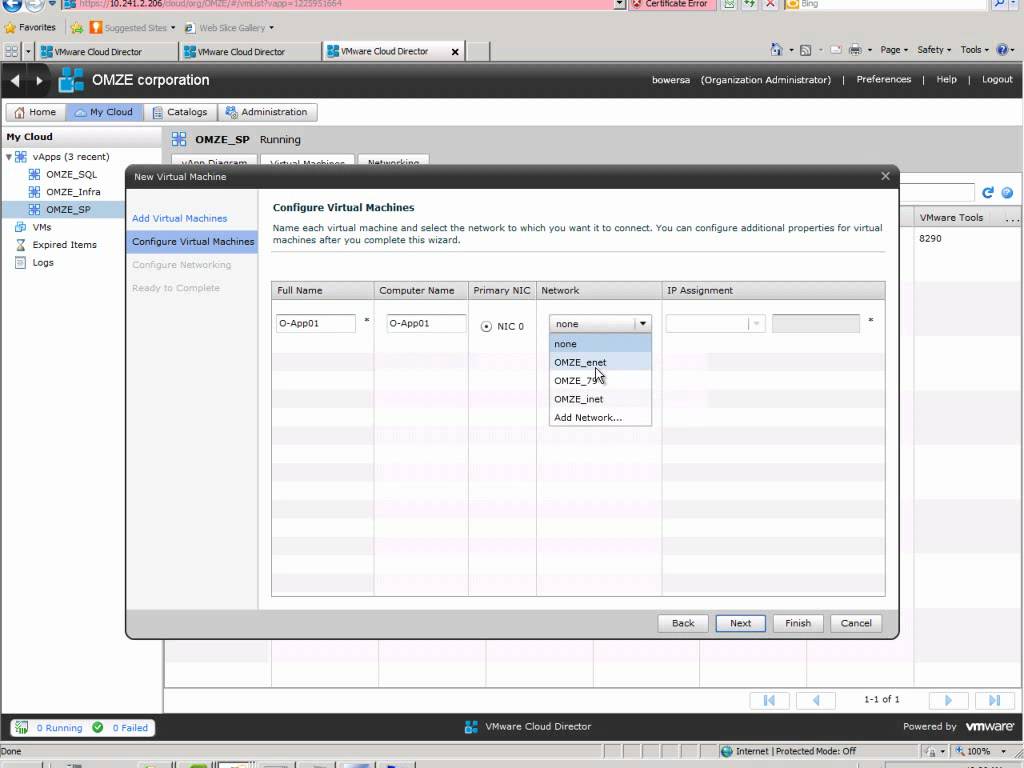

Creating a new VM in vCloud Director

I recently stumbled upon a new YouTube user called EMCProvenSolutions. It looks like the guys from EMC Ireland have put together a couple of really good video series, mostly on VMware and some replication methodologies. Go check them out. I love the Irish narration.

-

New Symantec Video on Source based Dedupe

When it comes to deduplicating your data, a smarter approach is to dedupe at the source. Why wait until all that data gets to storage to dedupe it? There is a better way. In this video, see how we use Mini Coopers as an analogy for data storage. Symantec…

-

EMC VNXe Data Protection with Symantec Backup Exec

EMC recently launched their new VNX storage line, a unified storage platform with one management framework supporting file, block, and object, optimized for virtual applications. From that line they also produced a more affordable “VNXe” model for small businesses and remote offices. There are a number of ways to protect the VNXe including…

-

Unable to Connect to the OpenStorage Device

Error: Unable to connect to the OpenStorage device. Ensure that the network is properly configured between the device and the media server.

Environment: Backup Server: Symantec Backup Exec 2010 R3; OpenStorage Device: DataDomain DD670 running 5.0.0.7-226726.

Today I was configuring DDBOOST for Backup Exec 2010 R3 using a replicated pair of DD670s. The DD670s were…

-

Troubleshooting: A checkpoint validation (hfscheck) of server checkpoint data is overdue.

How to troubleshoot EMC Avamar Event ID 114113: A checkpoint validation (hfscheck) of server checkpoint data is overdue.

-

Avamar Backup Job fails with error code 10007

avndmp Error 9469 Snapup of “ndmp-volume-name” aborted due to Error during NDMP session

-

How to shutdown Avamar

The following procedure gives the steps to safely shut down EMC Avamar services while avoiding a revert to checkpoint.