Tag: avamar

-

EMC Avamar SSL Cert Generation

After completing a successful root-to-root Avamar migration, I noticed that the old SSL certs were still being used. After some digging, I found a simple command to update them. The gen-ssl-cert command installs a temporary Apache web server SSL cert and restarts the web server. Usage: gen-ssl-cert [--debug] [--help] [--verbose] Note: You…

-

Avamar Backup of a Windows VM fails with the error: Protocol error from VMX

Recently, on a new install of Avamar version 6.1, I had a VMware image-based backup fail with error 10007. On further investigation of the backup job log, I noticed that the snapshot failed with: A general system error occurred: Protocol error from VMX. This is a good example of two very vague errors on…

-

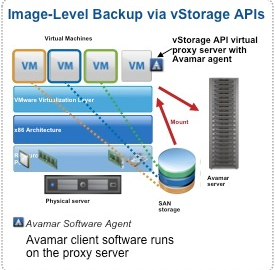

Avamar 6.1 Unified Proxy Appliance for VMware

I’m going back over some of the changes in Avamar 6.1 and am very impressed with the enhancements Avamar now offers for VMware image-based backups. Before version 6.1 you needed a separate image proxy for Linux and a separate proxy for Windows; now, with the new proxy design, both operating systems have…

-

Warning 6698 VSS exception code 0x800706be thrown freezing volumes – The remote procedure call failed

When you try to create shadow copies on large volumes that have a small cluster size (less than 4 kilobytes), or take snapshots of several very large volumes at the same time, the VSS software provider may allocate more paged pool memory during shadow copy creation than is required. If…

-

Troubleshooting: A checkpoint validation (hfscheck) of server checkpoint data is overdue.

How to troubleshoot EMC Avamar Event ID 114113: "A checkpoint validation (hfscheck) of server checkpoint data is overdue."

-

Avamar Backup Job fails with error code 10007

avndmp Error 9469 Snapup of “ndmp-volume-name” aborted due to Error during NDMP session

-

How to shut down Avamar

The following procedure gives you the steps to safely shut down EMC Avamar services while avoiding a rollback to a checkpoint.

-

How to check node capacity across an EMC Avamar grid

If you think your EMC Avamar grid is nearing capacity, here are a couple of ways to check every node for space used and disk space available.